IBM Spyre Accelerator unleashing innovation

IBM (NYSE: IBM) has announced the upcoming general availability of the IBM Spyre Accelerator, an AI accelerator that enables low-latency inferencing for generative and agentic AI use cases while prioritising the security and resilience of core workloads. Earlier this year, IBM announced that the Spyre Accelerator would be available in IBM z17, LinuxONE 5, and Power11 systems. Spyre will be generally available for IBM z17 and LinuxONE 5 systems on October 28, and for Power11 servers in early December.

The IT landscape is shifting from conventional logic workflows to agentic AI inferencing. AI agents require low-latency inference and real-time system responsiveness. IBM recognised that mainframes and servers need to run AI models alongside the most demanding enterprise applications without compromising throughput. To meet this demand, clients need AI inferencing hardware that supports generative and agentic AI while preserving the security and resilience of essential data, transactions, and applications. The accelerator is also designed to let clients keep mission-critical data on-premises to reduce risk, while improving operational and energy efficiency.

The IBM Spyre Accelerator reflects the strength of IBM’s research-to-product pipeline, combining innovation from the IBM Research AI Hardware Center with enterprise-grade development from IBM Infrastructure. Initially introduced as a prototype chip, Spyre was refined through rapid iteration, including cluster deployments at IBM’s Yorktown Heights campus and collaborations with the University at Albany’s Center for Emerging Artificial Intelligence Systems.

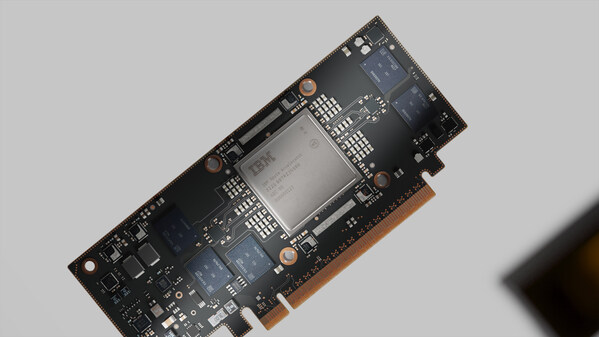

The IBM Research prototype has since evolved into an enterprise-grade product for use in IBM Z, LinuxONE, and Power systems. Today, the Spyre Accelerator is a commercial system-on-a-chip with 32 individual accelerator cores and 25.6 billion transistors. Produced using 5nm node technology, each Spyre is mounted on a 75-watt PCIe card. To scale AI capabilities, up to 48 cards can be clustered in an IBM Z or LinuxONE system, or up to 16 cards in an IBM Power system.
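As a rough illustration of what those limits imply, the short sketch below multiplies out the per-card figures quoted above for a fully populated system. The function name `cluster_totals` is hypothetical, and the wattage is simply the sum of the 75-watt card ratings. It is an assumption-laden upper bound for the cards alone, not a measured power figure for any system.

```python
# Per-card figures quoted in the article.
CORES_PER_CARD = 32   # accelerator cores on each Spyre chip
WATTS_PER_CARD = 75   # rated power of the PCIe card

# Maximum number of clustered cards per system family, as stated in the article.
MAX_CARDS = {"IBM Z / LinuxONE": 48, "IBM Power": 16}


def cluster_totals(cards: int) -> dict:
    """Return aggregate accelerator cores and summed card power rating.

    Illustrative arithmetic only: the wattage is the sum of card ratings
    and ignores the host system entirely.
    """
    return {
        "cards": cards,
        "cores": cards * CORES_PER_CARD,
        "card_watts": cards * WATTS_PER_CARD,
    }


if __name__ == "__main__":
    for system, cards in MAX_CARDS.items():
        totals = cluster_totals(cards)
        print(
            f"{system}: {totals['cards']} cards, "
            f"{totals['cores']} accelerator cores, "
            f"{totals['card_watts']} W rated card power"
        )
```

On these figures, a full IBM Z or LinuxONE configuration works out to 1,536 accelerator cores and 3,600 watts of rated card power, and a full IBM Power configuration to 512 cores and 1,200 watts.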

“One of our key priorities has been advancing infrastructure to meet the demands of new and emerging AI workloads,” said Barry Baker, COO, IBM Infrastructure & GM, IBM Systems. “With the Spyre Accelerator, we’re extending the capabilities of our systems to support multi-model AI – including generative and agentic AI. This innovation positions clients to scale their AI-enabled mission-critical workloads with uncompromising security, resilience, and efficiency, while unlocking the value of their enterprise data.”

“We launched the IBM Research AI Hardware Center in 2019 with a mission to meet the rising computational demands of AI, even before the surge in LLMs and AI models we’ve recently seen,” said Mukesh Khare, GM of IBM Semiconductors and VP of Hybrid Cloud, IBM. “Now, amid increasing demand for advanced AI capabilities, we’re proud to see the first chip from the Center enter commercialization, designed to deliver improved performance and productivity to IBM’s mainframe and server clients.”

Spyre Accelerators give IBM clients fast, secure processing with on-premises AI acceleration. This is a significant milestone, as it allows businesses to use AI at scale while keeping their data on IBM Z, LinuxONE, and Power systems. In mainframe systems, Spyre works together with the Telum II processor for IBM Z and LinuxONE to provide improved security, low latency, and high transaction processing rates. With this advanced hardware and software stack, businesses can use Spyre to scale multiple AI models for predictive use cases such as advanced fraud detection and retail automation.

On IBM Power-based servers, Spyre clients can draw on a catalogue of AI services to enable end-to-end AI for enterprise workflows. Clients can install the AI services from the catalogue with a single click. [1]

Combined with an on-chip accelerator (MMA), the Spyre Accelerator for Power also speeds up data conversion for generative AI, delivering high throughput for deep process integrations. In addition, with a prompt size of 128, it enables the ingestion of more than 8 million documents for knowledge base integration in an hour. [2]

This performance, combined with IBM’s software architecture, security, scalability, and energy efficiency, helps clients integrate generative AI frameworks into their enterprise workloads.

Notes:

1. A single deployment command can deploy one container, or a group of containers, containing one AI service from the IBM-supported catalogue. The user interface (UI) provided by the catalogue runs these commands in the backend with a single click on the UI page of the corresponding AI service.

2. Based on internal testing using a 1-card container and a 1M-unit data set with prompt size 128 and batch size 128. Individual results may vary with the scale of the workload, the use of storage subsystems, and other factors.

Picture Source: IBM